Deep fakes give attackers the ability to create convincing media that could lead employees to take dangerous actions. Fake audio and video are getting more and more convincing, with uses ranging from a wild Christmas message from the Queen to a family member calling you in a panic.

In our latest Cyber Security Awareness Forum, I put together a panel of experienced cyber security leaders to talk about the effects a deep fake can have on businesses. Let’s meet the panel:

Victor Bittner (VB) – A first-time panelist, Victor is the CEO of Cyber Security Canada and holds an Accredited Certification Body for Management Systems.

Fletus Poston (FP) – A security champion, Fletus is a Senior Manager of Security Operations at CrashPlan®. CrashPlan® provides peace of mind through secure, scalable, straightforward endpoint data backup for any organization.

Michelle L. (ML) – Michelle is the Cyber Security Awareness Lead at Channel 4 in the UK. Channel 4 is a publicly-owned and commercially-funded UK public service broadcaster.

Ryan Healey-Ogden (RH) – Ryan is Click Armor’s Business Development Director. He also teaches at the University of Toronto College Cybersecurity Bootcamp.

And myself, Scott Wright (SW), CEO of Click Armor, the sponsor for this session. Now let’s get into protecting our businesses and people from the trickery of deep fakes.

What forms of deep fake would attackers use on employees?

VB: It’s the false information spread by deep fakes that is the real problem. You’re seeing a lot of fake images in the news, in the newspapers and in the stills.

Sometimes they’re blatantly easy to see and sometimes they’re so good that you really need the file itself to decipher if it’s a fake or not. And that’s going to be a social issue in the future.

SW: On the corporate side, you can think about the more acute or targeted attacks that can use deep fakes. For example, voicemails from the boss telling you to do things like go buy gift cards.

Then, as was said, there’s the damage to general social trust. Maybe somebody declares war, or a public persona starts to amass a response from their followers for things like crypto scams or worse.

FP: I think Scott hit it spot on. Say they can get a video posted in a place where employees will see it, it shows a senior leader or someone of any value, and they get the mannerisms correct to say, “Hey – In the summer, we’re going to a 4-day schedule and we would love for you to have every Friday off. All you have to do is go to this website and sign up.”

Most people are going to sign up to have every Friday off. They’ll put in their password and there you go.

RH: Emotional voicemails are happening, and those are going to be the harder things to decipher, especially once they start to hit those emotional cues. There are already big scams going around where they trick families into believing their children or grandkids are in trouble and need two grand sent to them, and bam, they do it.

I also want to add brand reputation there. A lot of organizations could have their entire brand smeared by a deep fake video of a CEO being posted to Twitter saying, “I am no longer confident in my company’s direction”, and the stock can take a dive in a quick second. Thousands of people could end up unemployed. That is how quickly things could happen.

How should you educate employees on deep fakes?

FP: It’s about managing human risk, which is another way of saying security awareness. You need everyone to think, “Once I can’t physically see you, I’m no longer going to trust what I hear.”

And the sad part about that is we’re in a remote workforce. We’re dealing with people across the globe right now. I may never meet some of you, but do business with you day in and day out. Given that, I’m going to have to agree on a safe word or something that works like a one-time password. Then I validate who they are when I call them by asking a question that we pre-shared and that they would know the answer to.

And it’s silly, but we make it into a game. Like you can say, “For Monday’s call we’re going to say our favourite pizza topping.” And then once you use that phrase, you never use that phrase again.
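For teams that want to keep track of these phrases, the idea Fletus describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a tool the panel uses: the class name `OneTimePhraseBook`, its methods, and the occasion labels are all hypothetical. It shows the two rules from the discussion: a phrase is agreed in advance for one occasion, and once it has been used it is never accepted again.

```python
import hmac


class OneTimePhraseBook:
    """Hypothetical helper: pre-shared phrases that each verify a caller once."""

    def __init__(self):
        self._active = {}   # occasion -> agreed phrase (normalized)
        self._used = set()  # phrases that must never be accepted again

    @staticmethod
    def _normalize(phrase):
        # Ignore case and extra spaces so "Pineapple  and ham" still matches.
        return " ".join(phrase.lower().split())

    def agree(self, occasion, phrase):
        """Record the phrase the team agreed on for one call or meeting."""
        phrase = self._normalize(phrase)
        if phrase in self._used:
            raise ValueError("phrase was already used; pick a new one")
        self._active[occasion] = phrase

    def verify(self, occasion, spoken):
        """Check what the caller said. Any attempt retires the phrase."""
        expected = self._active.pop(occasion, None)
        if expected is None:
            return False  # nothing agreed, or the phrase was already spent
        self._used.add(expected)
        # compare_digest avoids leaking information through timing.
        return hmac.compare_digest(
            expected.encode(), self._normalize(spoken).encode()
        )


book = OneTimePhraseBook()
book.agree("monday-call", "Pineapple and ham")
print(book.verify("monday-call", "pineapple and ham"))  # True
print(book.verify("monday-call", "pineapple and ham"))  # False: already used
```

One design choice in this sketch is that a wrong answer also retires the phrase, so after a failed check the team would need to agree on a new one through a channel they already trust.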

RH: I think deep fakes should just be in their own individual module. I know that A.I. and deep fakes go hand in hand, but there needs to be attention put just on deep fakes because there are so many different avenues of attack within a deep fake and there are so many other ramifications from that as well.

ML: I think the most successful advice I ever give is just to take time. Just take your time to check things and carve out time in your day. Don’t feel rushed into something without analyzing it first.

Unfortunately, a lot of work environments nowadays are remote, fast-paced, and stressful. So, it’s not hard to believe that if someone texted someone to get gift cards they might scramble to get them.

So, the best way to improve security is to really work on your culture in general. Make sure that people do spend that time with each other so that they aren’t just expected to call up and have that very confidential kind of discussion and/or transfer things and spend money.

VB: What I’m trying to teach everyone around me is that there are always two truths. There’s the truth you see, and the truth other people see. And you’ve got to see both sides. So you’ve got to realize that everyone sees things and it’s their truth. And you have to decide what your truth is.

SW: The attackers are realizing how much they can make from these deep fake scams and are going to spend more. They’re going to invest more in open-source intelligence. And they’re going to spend more than people believe anybody would spend in order to set up this kind of scam.

So I think when it comes to educating employees, we have to let them know that these tools not only exist but there are attackers who will invest a lot to get at whatever the organization has, whether it’s its assets or its access to supply chains.

What could be the long-term effects of deep fakes?

VB: We’ve been seeing, like in the 2016 election, that it has the potential to sway the public. People believe what they see and people believe what they read. And I know people believe what they read because I used to write for two magazines about technological advancement and everything. I used to complain a lot about the failures. And if I said something, they believed it without question.

So I think the social impact is going to be the big changer for the world itself, for awareness.

ML: I think great companies are going to be making use of these products just like we’re using ChatGPT. I think we’re going to end up paying for these kinds of subscriptions so we can make that kind of content to then put it into our training to show how easy it is.

RH: We’ve seen more advanced phishing attacks with ChatGPT. Now we’re going to see the same with social engineering attacks that use deep fake voice and video. That’s already happening. People are getting phone calls all the time from supposed loved ones asking for help and money. So that is going to spill into the workplace.

–

Easy access to creating deep fake video or audio is here, and attackers will do more than you think to get their hands on it. Although it’s a scary and quickly growing technology, it’s still important to train your employees and give them the awareness they need to protect themselves and your organization.

Have training modules on deep fakes, create safe words, and improve your security culture to help protect your organization. Want to hear more of our advice? Watch the full CSAF #19 on YouTube.

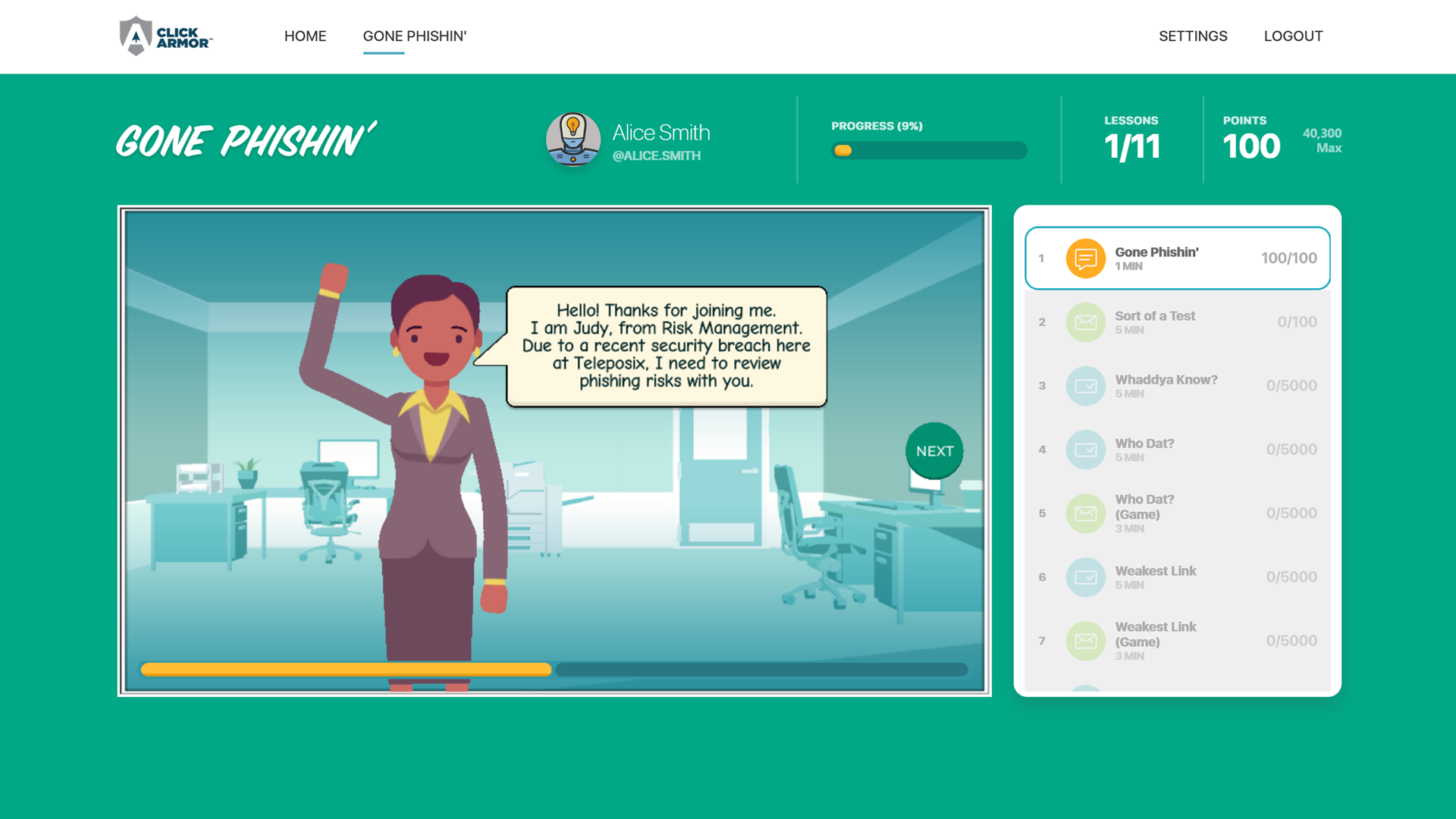

Click Armor is the first highly interactive security awareness platform, with engaging foundational courses and 3-minute weekly challenges that employees love. We offer content on everything from security basics, phishing and social engineering to passwords and privacy.

Even if your organization already has a solution, there’s a high likelihood that some employees are still not engaging and are exposing your systems and information to cyberthreats. Click Armor offers a special “remediation” package that complements existing solutions that don’t offer any relevant content for people who need a different method of awareness training.

Scott Wright is CEO of Click Armor, the gamified simulation platform that helps businesses avoid breaches by engaging employees to improve their proficiency in making decisions for cyber security risk and corporate compliance. He has over 20 years of cyber security coaching experience and was creator of the Honey Stick Project for Smartphones as a demonstration in measuring human vulnerabilities.