Just because an attacker does it doesn’t mean you should, too.

This may seem like an obvious statement. But think about it.

Compare the logic of the following scenario with the logic of sending an unfair phishing test message:

1. Attackers may use a gun to rob you in a dark alley

2. This attack will be successful 99% of the time

3. To prepare for this, you should face an unexpected attacker with a fake gun, regularly

This clearly does not sound like good advice.

The robbery scenario follows the same logic as many live phishing tests, in which penalties are threatened or bonuses are promised without employees knowing it’s a simulation. You can come up with equally unwise arguments for almost any social engineering or phishing attack scenario.

It’s all done with the rationale that “attackers will do it, so people need to experience it in a safe environment before it happens in real life.”

This is a reckless stance to take on security awareness. Employees shouldn’t be put in these kinds of potentially damaging situations without their knowledge and permission.

People can learn more effectively, and more safely, in an immersive simulation environment. There is no need to risk damaged morale, legal liability and reputational harm by creating phishing tests that use unfair and potentially damaging tactics.

Keep phishing tests fair and safe.

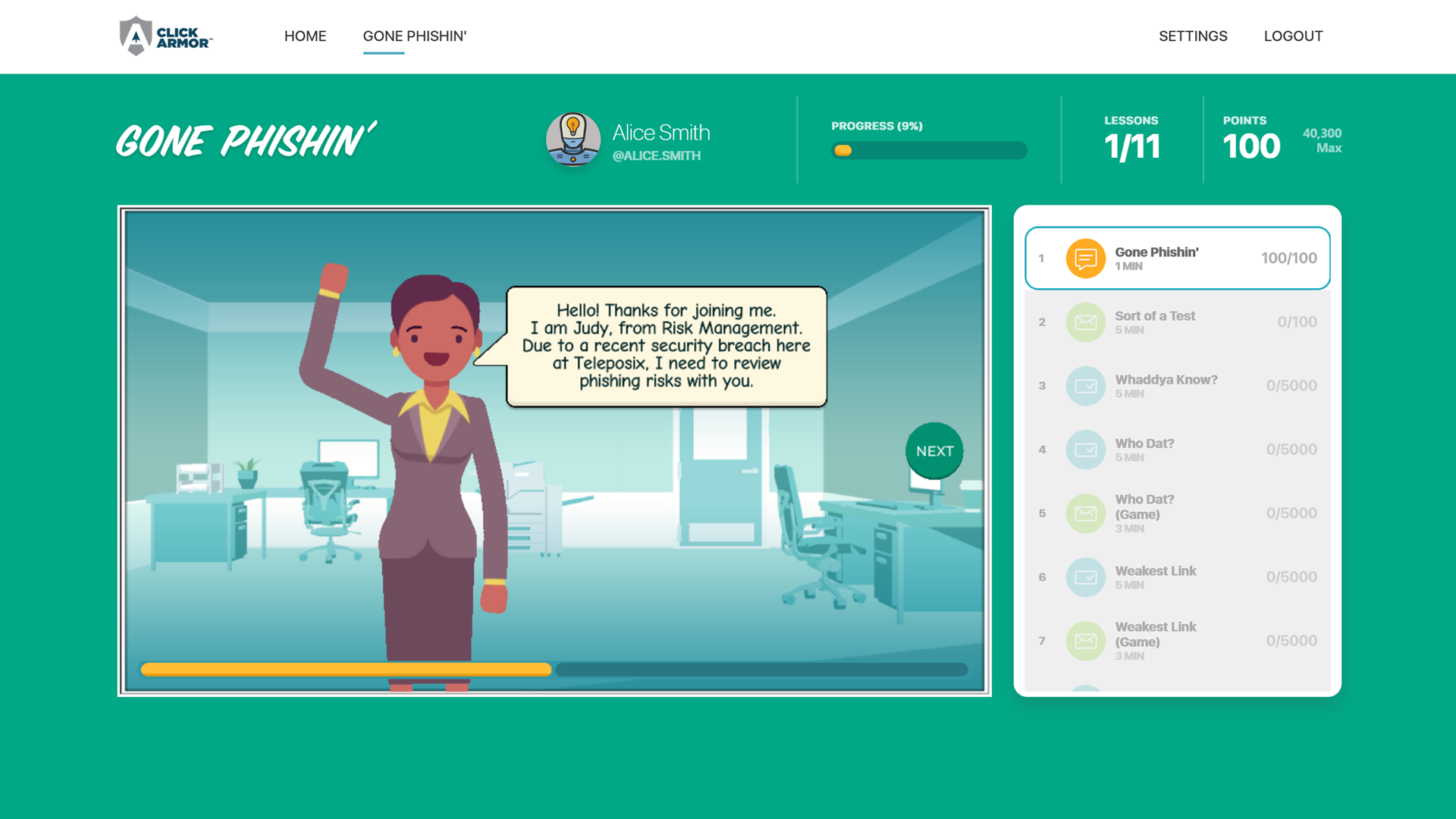

Scott Wright is CEO of Click Armor, the gamified simulation platform that helps businesses avoid breaches by engaging employees to improve their proficiency in making decisions about cyber security risk and corporate compliance. He has over 20 years of cyber security coaching experience and was the creator of the Honey Stick Project for Smartphones, a demonstration in measuring human vulnerabilities.